The technical stuff

AI-Driven Language Translation: A Technical Overview

Artificial Intelligence (AI) has revolutionized the field of language translation through the integration of advanced machine learning (ML) techniques, particularly in Natural Language Processing (NLP). At the heart of modern translation systems lie neural machine translation (NMT) models, which offer high accuracy, contextual understanding, and scalability for translating both textual inputs and full-length documents across a variety of domains.

1. Machine Learning Foundations of Language Translation

Language translation systems traditionally relied on rule-based and statistical machine translation (SMT) methods. These legacy approaches often struggled with idiomatic expressions and required extensive linguistic engineering. AI-enhanced translation now predominantly uses deep learning, specifically neural networks, to model and translate text.

1.1 Neural Machine Translation (NMT)

NMT replaces phrase-based SMT with an end-to-end neural network that reads and generates sentences. The dominant architectures include:

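Seq2Seq (Sequence-to-Sequence) Models: Introduced the encoder-decoder paradigm using recurrent networks such as LSTMs or GRUs. The encoder compresses the input sequence (source language) into a fixed-length context vector, which the decoder uses to generate the output sequence (target language) one token at a time. That single vector becomes a bottleneck on long sentences, which is what motivated attention.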

Transformer Architecture: Introduced by Vaswani et al. in 2017, this model replaces recurrence with self-attention, so all positions in a sequence can be processed in parallel during training instead of one step at a time. Transformers power models like Google's T5, Meta's NLLB, and OpenAI's GPT series.

2. Core Components of AI Translation Systems

2.1 Tokenization and Subword Encoding

Source texts are tokenized into subword units using algorithms such as Byte Pair Encoding (BPE) or the unigram language model, often through libraries like SentencePiece. Subword tokenization keeps the vocabulary small and improves handling of rare or compound words: an unseen word is split into known pieces rather than mapped to an unknown token, which helps the model generalize across morphologically rich languages.
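
As a rough illustration of how BPE builds its subword vocabulary, the sketch below learns merge rules from a toy word list and applies them to a new word. It is a simplified teaching version, not the implementation used by any particular tokenizer library; the function names, the "</w>" end-of-word marker, and the tie-breaking rule are assumptions made for this example.

```python
from collections import Counter

END = "</w>"  # end-of-word marker (a common convention, assumed here)


def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` in `symbols` with one merged symbol."""
    merged = []
    i = 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)


def learn_bpe_merges(words, num_merges):
    """Learn up to `num_merges` merge rules from a list of words."""
    # Each word starts as a sequence of characters plus the end-of-word marker.
    vocab = Counter(tuple(word) + (END,) for word in words)
    merges = []
    for _ in range(num_merges):
        # Count adjacent symbol pairs, weighted by word frequency.
        pair_counts = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break  # every word is already a single symbol
        # Most frequent pair wins; ties are broken lexicographically (an
        # arbitrary choice made here so the result is deterministic).
        best = max(pair_counts, key=lambda p: (pair_counts[p], p))
        merges.append(best)
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[merge_pair(symbols, best)] += freq
        vocab = new_vocab
    return merges


def apply_bpe(word, merges):
    """Segment a word by replaying the learned merges in order."""
    symbols = tuple(word) + (END,)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


corpus = ["low"] * 5 + ["lower"] * 2 + ["newest"] * 6 + ["widest"] * 3
merges = learn_bpe_merges(corpus, 10)
print(apply_bpe("lowest", merges))  # a word that never appeared in the corpus
```

Because "lowest" is segmented into pieces learned from other words, the model never sees an out-of-vocabulary token, which is the property the paragraph above describes.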

2.2 Contextual Embeddings

Modern models rely on contextual representations, such as those produced by pretrained encoders like BERT or XLM-R, to capture the meaning of a word from its surrounding text. This improves the translation of polysemous words and idioms, because the same surface form receives a different representation in each sentence-level context.

2.3 Attention Mechanisms

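Attention allows the model to focus on relevant parts of the source sentence during translation. In the Transformer, multi-head self-attention relates tokens within the same sequence, while encoder-decoder (cross) attention lets each target position attend to the source representations. Together they allow the model to learn complex alignments between source and target sequences, which is critical for syntax preservation and semantic accuracy.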
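
The core computation can be sketched in a few lines. The example below implements single-head scaled dot-product attention in plain Python, with queries standing in for decoder states and keys/values for encoder outputs. It is a minimal sketch for intuition only: real systems use several heads, learned projection matrices, and tensor libraries, and the function names and toy vectors here are assumptions.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for single-head attention.

    queries: list of vectors (e.g., one per target position)
    keys, values: lists of vectors (e.g., one per source position)
    """
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted average of the value vectors.
        outputs.append([
            sum(wj * v[c] for wj, v in zip(w, values))
            for c in range(len(values[0]))
        ])
    return outputs, weights


# Toy example: one target position attending over two source positions.
queries = [[1.0, 0.0]]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
outputs, weights = scaled_dot_product_attention(queries, keys, values)
print(weights[0])  # the first source position receives the larger weight
```

Each row of weights is a probability distribution over source positions, which is why attention weights are often read as a soft alignment.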

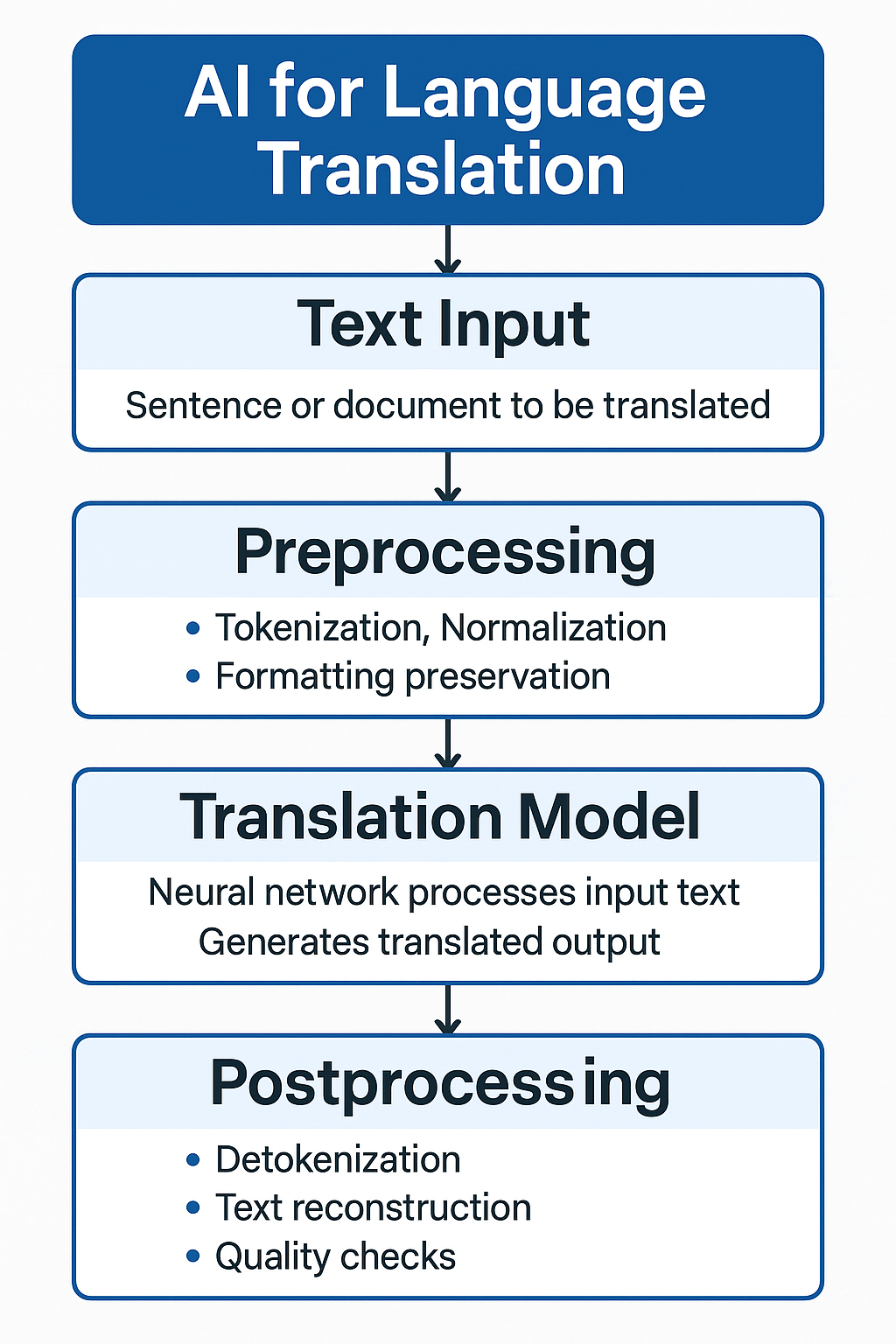

3. Document Translation Workflow

When translating large documents rather than individual sentences, the process involves additional components:

3.1 Document Preprocessing

Segmentation: Documents are split into linguistically coherent units (sentences or paragraphs).

Normalization: Text normalization includes handling punctuation, casing, and Unicode standardization.

Formatting Preservation: XML/HTML tags, document structure (headings, lists), and layout metadata are preserved using markup-aware tokenizers.
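
The three preprocessing steps above can be sketched as follows. This is a deliberately naive version using only the standard library: the placeholder format (__TAG0__), the regex-based sentence splitter, and all function names are assumptions for illustration. Production pipelines typically use a real HTML/XML parser and a trained sentence segmenter.

```python
import re
import unicodedata

TAG_RE = re.compile(r"<[^>]+>")                # naive match for XML/HTML tags
PLACEHOLDER_RE = re.compile(r"__TAG(\d+)__")   # hypothetical placeholder format
SENTENCE_END_RE = re.compile(r"(?<=[.!?])\s+")  # whitespace after ., ! or ?


def normalize(text):
    """Apply Unicode NFC normalization and collapse runs of whitespace."""
    text = unicodedata.normalize("NFC", text)
    return re.sub(r"\s+", " ", text).strip()


def protect_tags(text):
    """Swap each tag for a numbered placeholder so the model cannot alter it."""
    tags = []

    def stash(match):
        tags.append(match.group(0))
        return f"__TAG{len(tags) - 1}__"

    return TAG_RE.sub(stash, text), tags


def restore_tags(text, tags):
    """Put the original tags back in place of their placeholders."""
    return PLACEHOLDER_RE.sub(lambda m: tags[int(m.group(1))], text)


def split_sentences(text):
    """Very rough sentence segmentation on terminal punctuation."""
    return [s for s in SENTENCE_END_RE.split(text) if s]


raw = "<p>Hello   <b>world</b>. How are you?</p>"
clean = normalize(raw)
protected, tags = protect_tags(clean)
segments = split_sentences(protected)
print(segments)
print(restore_tags(protected, tags) == clean)
```

Keeping the tags out of the translatable text and restoring them afterwards is one simple way to preserve formatting; it assumes the translation step leaves the placeholders intact.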

3.2 Contextual Coherence Across Sentences

For documents, maintaining contextual coherence across multiple sentences is essential. Advanced translation models use document-level NMT that incorporates previous and subsequent segments to maintain referential consistency (e.g., pronouns, named entities, and tenses).
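
One simple way to give a sentence-level model access to neighbouring segments is to concatenate them into the input. The sketch below builds such context windows. The " <sep> " separator, the window sizes, and the function name are assumptions chosen for illustration; actual document-level NMT systems differ in how they encode and use context.

```python
def build_context_windows(sentences, num_previous=2, num_next=0, sep=" <sep> "):
    """Pair each sentence with its neighbouring sentences as context.

    Returns one dict per sentence containing the surrounding segments and a
    single concatenated string that could be fed to a context-aware model.
    """
    windows = []
    for i, current in enumerate(sentences):
        previous = sentences[max(0, i - num_previous):i]
        following = sentences[i + 1:i + 1 + num_next]
        windows.append({
            "context_before": previous,
            "current": current,
            "context_after": following,
            "model_input": sep.join(previous + [current] + following),
        })
    return windows


document = ["Anna bought a car.", "She loves it.", "It is red."]
for window in build_context_windows(document, num_previous=1):
    print(window["model_input"])
```

With the preceding sentence in view, a model has the information it needs to resolve "She" and "it" consistently, which is the kind of referential consistency described above.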

3.3 Post-processing

Detokenization and Reconstruction: Translated text is detokenized and reintegrated into the original document format.

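Alignment Verification: Source-target alignment checks (e.g., inspecting alignment matrices) and, where reference translations are available, automatic scores such as BLEU or TER are used for quality assurance.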

Named Entity Recognition (NER): Ensures consistency and accuracy for proper nouns and technical terms.
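
A consistency check for proper nouns and technical terms can be approximated with a glossary lookup. The sketch below flags segments where a known source term appears but its expected translation does not. Note that this substitutes a fixed glossary and case-insensitive substring matching for a real NER model; the function name and the example glossary are hypothetical.

```python
def check_term_consistency(pairs, glossary):
    """Flag segments whose translation lacks an expected glossary term.

    pairs: list of (source_segment, translated_segment)
    glossary: dict mapping a source term to its required target term
    Returns a list of (segment_index, source_term, expected_target_term).
    """
    issues = []
    for index, (source, target) in enumerate(pairs):
        for src_term, tgt_term in glossary.items():
            in_source = src_term.lower() in source.lower()
            in_target = tgt_term.lower() in target.lower()
            if in_source and not in_target:
                issues.append((index, src_term, tgt_term))
    return issues


pairs = [
    ("Open the Control Panel.", "Ouvrez le Panneau de configuration."),
    ("The Control Panel is closed.", "Le tableau de bord est fermé."),
]
glossary = {"Control Panel": "Panneau de configuration"}
print(check_term_consistency(pairs, glossary))
```

Flagged segments can then be routed to a human reviewer rather than corrected automatically.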

4. Training and Fine-Tuning Translation Models

4.1 Data Requirements

AI translation systems require large-scale parallel corpora (source-target sentence pairs). Public datasets include:

Europarl (parliament proceedings)

WMT (translation competitions)

OPUS (open parallel corpora)

4.2 Transfer Learning and Fine-Tuning

Pretrained multilingual models (e.g., mBART, NLLB-200) can be fine-tuned on domain-specific corpora, such as legal, medical, or technical texts, using supervised learning to improve terminology accuracy.

4.3 Reinforcement Learning and Human Feedback

Modern systems also incorporate reinforcement learning from human feedback (RLHF) or active learning loops, allowing models to adapt over time based on correction logs or reviewer input.

5. Quality Evaluation and Metrics

Common evaluation metrics include:

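BLEU (Bilingual Evaluation Understudy): Measures modified n-gram precision of the generated translation against one or more reference translations, with a penalty for overly short output. Higher is better.

TER (Translation Edit Rate): Measures the number of edits (insertions, deletions, substitutions, and shifts) needed to turn a translation into the reference, normalized by reference length. Lower is better.

COMET / BLEURT: Learned metrics, trained on human quality judgments, that consider semantic similarity rather than just surface overlap.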

Human Evaluation: Still the gold standard, especially for fluency, cultural accuracy, and domain-specific quality.
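
To make the two surface-level metrics concrete, the sketch below computes a simplified sentence-level BLEU and a word-level edit rate. Both are approximations written for this overview: the BLEU here uses a single reference, whitespace tokenization, and no smoothing, and the edit rate omits the shift operation that full TER includes. For reportable scores, an established evaluation toolkit should be used instead.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Count all n-grams of length n in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def simple_bleu(candidate, reference, max_n=4):
    """Unsmoothed single-reference BLEU on whitespace tokens (0.0 to 1.0)."""
    cand, ref = candidate.split(), reference.split()
    if not cand:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts = ngram_counts(cand, n)
        ref_counts = ngram_counts(ref, n)
        # Clip each candidate n-gram count by its count in the reference.
        overlap = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0 or total == 0:
            return 0.0  # without smoothing, any zero precision gives zero
        log_precisions.append(math.log(overlap / total))
    # Brevity penalty discourages candidates shorter than the reference.
    if len(cand) > len(ref):
        brevity_penalty = 1.0
    else:
        brevity_penalty = math.exp(1 - len(ref) / len(cand))
    return brevity_penalty * math.exp(sum(log_precisions) / max_n)


def word_edit_rate(candidate, reference):
    """Word-level Levenshtein distance divided by reference length (no shifts)."""
    cand, ref = candidate.split(), reference.split()
    prev = list(range(len(ref) + 1))
    for i, c in enumerate(cand, start=1):
        curr = [i]
        for j, r in enumerate(ref, start=1):
            cost = 0 if c == r else 1
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost))
        prev = curr
    return prev[-1] / max(len(ref), 1)


reference = "the quick brown fox jumps over the lazy dog"
candidate = "the quick brown fox jumps over the dog"
print(simple_bleu(candidate, reference))
print(word_edit_rate(candidate, reference))
```

Both functions compare surface tokens only, so a correct paraphrase is penalized as heavily as a genuine error. That limitation is why learned metrics and human evaluation appear in the list above.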

6. Deployment Considerations for Software Applications

6.1 Cloud-Based Translation APIs

Many companies integrate AI translation via APIs (e.g., Google Cloud Translation, Amazon Translate, DeepL API). These services hide the ML complexity behind a simple request/response interface and offer scalability and multilingual support.

6.2 On-Premise and Offline Translation

For security-conscious organizations, translation models can be deployed on-premises in containers or embedded on edge devices. Compact translation models make this feasible, for example distilled NLLB-200 checkpoints, Marian-based OPUS-MT models, or larger models shrunk through quantization.

6.3 Integration with Applications

Real-time Translation: Live chat, email, or video subtitles.

Batch Processing: Translating entire repositories of documents.

CAT Tool Integration: AI engines working alongside Computer-Assisted Translation (CAT) platforms like Trados Studio (formerly SDL Trados) or memoQ for human-in-the-loop translation.
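
For the batch-processing case, a common pattern is to group segments into size-limited requests and pass each group to whatever translation backend is in use. The sketch below shows that pattern with the backend supplied as a plain callable. The 5000-character limit, the function names, and the translate_batch callable are all assumptions; real services document their own limits and client libraries.

```python
def make_batches(segments, max_chars=5000):
    """Group segments into batches whose total length stays within max_chars.

    A single segment longer than max_chars still gets its own batch.
    """
    batches, current, size = [], [], 0
    for segment in segments:
        if current and size + len(segment) > max_chars:
            batches.append(current)
            current, size = [], 0
        current.append(segment)
        size += len(segment)
    if current:
        batches.append(current)
    return batches


def translate_document(segments, translate_batch, max_chars=5000):
    """Translate all segments in order using a caller-supplied backend.

    translate_batch: hypothetical callable taking a list of strings and
    returning a list of translated strings of the same length.
    """
    translated = []
    for batch in make_batches(segments, max_chars):
        result = translate_batch(batch)
        if len(result) != len(batch):
            raise ValueError("backend returned a different number of segments")
        translated.extend(result)
    return translated


def fake_backend(batch):
    """Stand-in backend that upper-cases text, used only for demonstration."""
    return [text.upper() for text in batch]


print(translate_document(["aaaa", "bbbb", "cc"], fake_backend, max_chars=8))
```

Passing the backend in as a callable keeps the batching logic independent of any particular vendor, so the same code can sit in front of a cloud API or an on-premises model.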

7. Ethical and Legal Considerations

Bias Mitigation: Language models can inherit cultural or gender biases from training data.

Data Privacy: GDPR and other regulations impact the handling of sensitive or personal data in translation workflows.

Explainability: Increasing demand for interpretability in high-stakes fields like legal or medical translation.

Conclusion

AI-based language translation has moved from rule-based and phrase-based systems to neural architectures that combine large parallel corpora, attention mechanisms, and contextual representations. Whether translating a single sentence in real time or an entire technical document, modern NMT systems can produce accurate, context-aware output and can be adapted to domain-specific language. For software companies building translation platforms, this means faster and more reliable tools, provided that privacy, transparency, and ethical safeguards are built in from the start.
